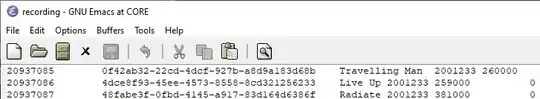

I have a plain text data file, consisting of 28 million tab-delimited records, each containing nine fields. The left ends of the first three records look like this:

I would like to truncate each of those records after the first four fields. That is, I don't need fields five through nine. After truncation, the records in the image (above) would look like this:

20937085 0f42ab32-22cd-4dcf-927b-a8d9a183d68b Travelling Man 2001233

20937086 4dce8f93-45ee-4573-8558-8cd321256233 Live Up 2001233

20937087 48fabe3f-0fbd-4145-a917-83d164d6386f Radiate 2001233
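To pin down the transformation I am after, here is a minimal sketch in Python rather than Emacs. It is meant only to specify the behavior, not as a finished solution. The function names, the file paths mentioned afterward, and the UTF-8 encoding are all assumptions for illustration; nothing here is taken from the actual data file beyond "tab-delimited, keep the first four fields".

```python
def keep_first_fields(line: str, n: int = 4) -> str:
    """Return the first n tab-delimited fields of one record.

    The trailing line ending is stripped; a record with fewer than
    n fields is returned unchanged (minus its line ending).
    """
    # maxsplit=n yields at most n+1 pieces, so the unwanted tail
    # (fields n+1 onward) is never split further than necessary.
    fields = line.rstrip("\r\n").split("\t", n)
    return "\t".join(fields[:n])


def truncate_stream(src, dst, n: int = 4) -> None:
    """Copy records from src to dst one line at a time, keeping n fields."""
    for line in src:
        dst.write(keep_first_fields(line, n) + "\n")


def truncate_file(src_path: str, dst_path: str, n: int = 4) -> None:
    """Stream one file into another so the whole file is never held in memory.

    Assumption: the data is UTF-8 text; adjust the encoding if it is not.
    """
    with open(src_path, encoding="utf-8", newline="") as src, \
         open(dst_path, "w", encoding="utf-8", newline="\n") as dst:
        truncate_stream(src, dst, n)
```

With placeholder paths, a call such as `truncate_file("records.tsv", "records-4-fields.tsv")` would write the shortened copy to a new file and leave the original untouched. Because the records are processed one line at a time, the 28 million lines should not need to fit in memory at once.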

I think the last time I used Emacs for anything substantial was around 1983. I have missed it. For better and for worse, I was distracted by the arrival of the IBM PC. That, or the sheer passage of time, may have had a deadening effect upon the portion of the intellect previously devoted to a different sort of computing.

For whatever reason, Emacs is now a largely foreign language to me. But I think it may provide the only solution within my reach at present.

If anyone can give me a nudge toward a means of automating the removal of fields five through nine from the right ends of those 28 million records, I would be most grateful.